The main objective of this proposal is to integrate the ROS2 libraries for the iOS and Android operating systems into the smartphone-based Robobo robot (https://theroboboproject.com/en/). These libraries, developed by Esteve Fernandez (https://github.com/esteve/ros2_objc), give ROS2 users direct access to the main sensors, actuators, communications and services of current smartphones. Integrating them with the Robobo Framework will let the ROS2 community use their smartphones in a mobile robot, creating a very powerful low-cost platform. Moreover, a Gazebo simulation model of Robobo will be developed and released as open source. Finally, three state-of-the-art computer vision algorithms that run natively on the smartphone will be developed and provided to the ROS2 community.

As a result of the completion of this project, the smartphone apps for Android and iOS are available on Google Play and the Apple App Store, so the Robobo robot can be programmed through ROS2 from any compatible computer. Users can download the ROS2 libraries that give access to all the sensing topics developed for Robobo. These topics provide information from most of the smartphone's internal sensors (gyroscope, accelerometer, microphone, camera, battery, etc.) and from all the sensors of the Robobo base (infrared, motor encoders and battery). The libraries also allow users to send commands to the robot through other ROS2 topics and services, for example to use the smartphone speaker, move the base motors or turn on the LEDs. All these ROS2 libraries are open source and are accessible in the MINT public repository on GitHub (https://github.com/mintforpeople/robobo-programming/wiki/Using-Robobo-ROS2). There we have included a description of the content and functionality of each part of the RosBOBO project, which is structured in independent repositories. The documentation, including tutorials to start using Robobo in ROS2 with Android and iOS smartphones, is available on the Robobo wiki (https://github.com/mintforpeople/robobo-programming/wiki/ROS2).
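As an illustration of how a sensing topic could be read from a ROS2 computer, the following is a minimal sketch using `rclpy`. The topic name `/robot/battery/base`, the `std_msgs/Int8` message type, the node name and the 20% threshold are all assumptions made for the example and are not taken from the Robobo libraries; the actual topic names and message types are listed in the Robobo wiki.

```python
"""Sketch: monitor one Robobo sensing topic from a ROS2 computer."""

# Hypothetical topic name and threshold, chosen only for this example.
BATTERY_TOPIC = "/robot/battery/base"
LOW_BATTERY_PERCENT = 20


def battery_status(percent, threshold=LOW_BATTERY_PERCENT):
    """Classify a battery percentage as 'low' or 'ok'."""
    if not 0 <= percent <= 100:
        raise ValueError(f"battery percentage out of range: {percent}")
    return "low" if percent < threshold else "ok"


def main():
    # ROS2 imports live inside main() so that battery_status() can be
    # reused on a machine without ROS2 installed.
    import rclpy
    from std_msgs.msg import Int8  # assumed message type, see lead-in

    rclpy.init()
    node = rclpy.create_node("robobo_battery_monitor")

    def on_battery(msg):
        # Log every reading together with its classification.
        status = battery_status(msg.data)
        node.get_logger().info(f"base battery {msg.data}%: {status}")

    # Queue depth of 10 is a common default for low-rate sensor topics.
    node.create_subscription(Int8, BATTERY_TOPIC, on_battery, 10)
    try:
        rclpy.spin(node)
    except KeyboardInterrupt:
        pass
    finally:
        node.destroy_node()
        rclpy.shutdown()
```

Calling `main()` from a terminal where the ROS2 environment has been sourced would start the monitor; sending commands to the speaker, motors or LEDs would follow the same pattern with a publisher or service client instead of a subscription.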

In addition, new computer vision algorithms run onboard Robobo’s smartphone and are available through ROS2. Because the algorithms run natively on the smartphone, images do not need to be transmitted over Wi-Fi and, consequently, the robot responds faster in real-time tasks. Specifically, we have implemented an object detection module in the Robobo Framework (link). It is an adaptation of the one included in the TensorFlow Lite library (link). With it, we are able to detect more than 100 different types of objects in real time. Moreover, we have implemented an ArUco marker detection module in the Robobo Framework (link). It is an adaptation of the library available in OpenCV for AprilTag and ArUco markers (link). This library has also been modified to run natively on the smartphone OS, providing the user with direct and simple information about the detected tag and avoiding the image processing stage on the computer.
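To give a rough sense of why onboard processing reduces the load on the wireless link, the sketch below compares the data rate of an uncompressed video stream with that of a stream of detection results. Every number in it (640x480 RGB frames at 30 frames per second, 64 bytes per detection, 5 detections per frame) is an assumed value for illustration, not a measurement from Robobo, and a real video stream would normally be compressed.

```python
def raw_stream_mbps(width, height, bytes_per_pixel, fps):
    """Data rate of uncompressed video, in megabits per second."""
    bits_per_second = width * height * bytes_per_pixel * fps * 8
    return bits_per_second / 1e6


def detection_stream_kbps(bytes_per_detection, detections_per_frame, fps):
    """Data rate of per-frame detection results, in kilobits per second."""
    bits_per_second = bytes_per_detection * detections_per_frame * fps * 8
    return bits_per_second / 1e3


# Assumed values, for illustration only.
raw = raw_stream_mbps(width=640, height=480, bytes_per_pixel=3, fps=30)
det = detection_stream_kbps(bytes_per_detection=64, detections_per_frame=5, fps=30)

print(f"raw video:  {raw:.1f} Mbit/s")
print(f"detections: {det:.1f} kbit/s")
print(f"ratio:      {raw * 1e3 / det:.0f}x")
```

Under these assumptions the raw stream needs about 221 Mbit/s, while the detection results need about 77 kbit/s, so publishing only the results is lighter by roughly three orders of magnitude.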

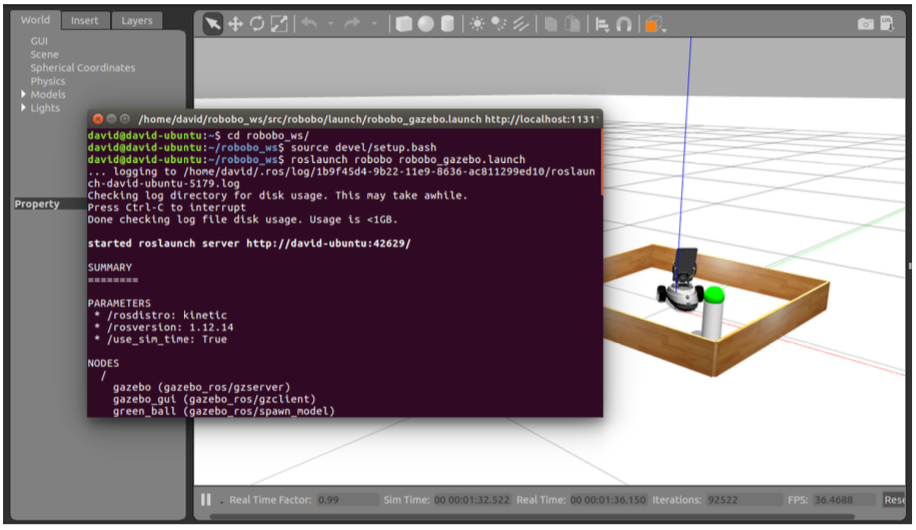

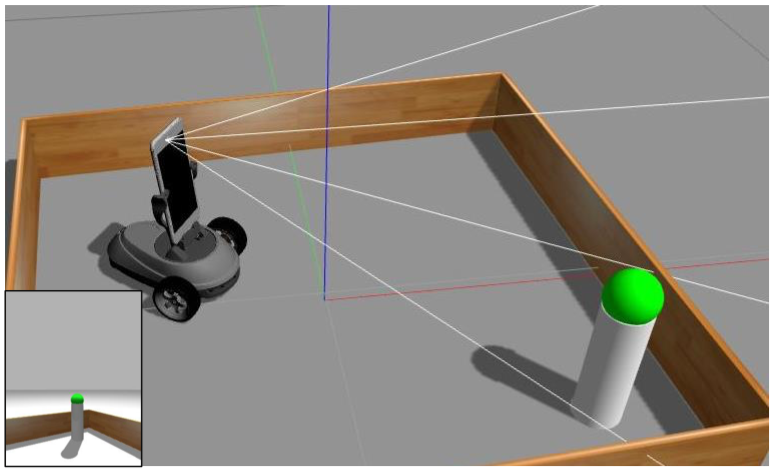

Finally, a Gazebo model of Robobo, including the smartphone, has also been developed, so the robot can be programmed in simulation using ROS and the code can then be easily transferred to the real platform. Specifically, users can download the SDF file that contains the physical model of the base and a generic smartphone, and load it in Gazebo 7. The model can be programmed through ROS, using topics that provide information from the smartphone’s camera and IMU, as well as from the Robobo base, such as the infrared sensors and all the motor encoders. Furthermore, the model can be controlled using standard ROS services to access the wheel motors and the pan-tilt unit motors. The package with the model and the README file is completely open source and can be downloaded from https://github.com/mintforpeople/robobo-gazebo-simulator. In addition, we have included a short tutorial on the Robobo wiki: https://github.com/mintforpeople/robobo-programming/wiki/Gazebo.
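Since SDF is an XML format, the contents of a model file can be inspected before loading it in Gazebo. The sketch below lists the links, sensors and joints of a model using only the Python standard library. The embedded SDF fragment, including the model, link, sensor and joint names, is a hypothetical stand-in written for this example and is not the actual Robobo model file.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified SDF fragment used only for illustration.
EXAMPLE_SDF = """<sdf version="1.5">
  <model name="robobo_example">
    <link name="base">
      <sensor name="ir_front" type="ray"/>
    </link>
    <link name="smartphone">
      <sensor name="camera" type="camera"/>
      <sensor name="imu" type="imu"/>
    </link>
    <joint name="pan" type="revolute">
      <parent>base</parent>
      <child>smartphone</child>
    </joint>
  </model>
</sdf>
"""


def summarize_sdf(sdf_text):
    """Return the model name, the sensors per link, and the joints."""
    root = ET.fromstring(sdf_text)
    model = root.find("model")
    if model is None:
        raise ValueError("no <model> element found in SDF text")
    # Map each link name to its (sensor name, sensor type) pairs.
    sensors = {
        link.get("name"): [
            (sensor.get("name"), sensor.get("type"))
            for sensor in link.findall("sensor")
        ]
        for link in model.findall("link")
    }
    joints = [(j.get("name"), j.get("type")) for j in model.findall("joint")]
    return {"model": model.get("name"), "sensors": sensors, "joints": joints}


summary = summarize_sdf(EXAMPLE_SDF)
print("model:", summary["model"])
for link_name, link_sensors in summary["sensors"].items():
    print(f"  link {link_name}: {link_sensors}")
print("joints:", summary["joints"])
```

To inspect a downloaded model instead, the text of the SDF file from the repository could be read from disk and passed to `summarize_sdf` in place of `EXAMPLE_SDF`.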